This article is also available in German

Title: No future-proof architectures!
Subtitle: Why future-proofing should not be an architecture design goal.
Language: en
Author: Eberhard Wolff
Date: 2023-07-11
published_at: 2023-07-11
Keywords: software-architecture
Publisher: S&S Media-Verlag
Publication: Java Magazin
Source link: https://entwickler.de/software-architektur/keine-zukunftssicheren-softwarearchitekturen-yagni-prinzip
Source note: This article originally appeared in edition 04/2023 of the publication Java Magazin. It is republished on innoq.com with the kind permission of the publishing company S&S Media.

Martin Fowler has defined software architecture as the decisions that are both important and difficult to change (YouTube video). From this, we could infer that an ideal, future-proof software architecture must never be altered, since that would avoid the difficult changes. But that isn’t really what Martin Fowler has in mind. This article describes why future-proof architecture in this sense is not only unachievable but also impractical as a goal.

A story

Let’s take a look at an example: A decision is made to migrate a system to a new architecture, module by module. The process is technically validated with the help of a prototype. This approach makes sense because a step-by-step migration is actually always appropriate, and bringing a technical risk under control with a prototype is a good idea in cases of higher risk.

After starting the project, the team learns more about the nature of the domain, the requirements and the existing system. The new perspective makes it clear that a module-by-module migration will make it impossible to implement the desired new domain-specific assistance for the users. A number of other problems are also encountered, and as a result, the project is halted and considered a failure.

Lesson learned?

As with every failure, it is important to ask how to prevent such a result in the future. One possible conclusion is that projects should establish more safeguards from now on. Stricter prerequisites for approving projects could be defined. The implemented prototype is already a step in that direction. Specifically, in the example, the architecture would have had to define, before the launch of the project, a migration approach capable of enabling the desired support for the users, so that the architecture would not have to be tossed aside so quickly.

In my opinion, this conclusion is incorrect – however logical it may sound. The correct response is to always revise the architecture when new facts are discovered. There are a number of reasons for this:

Software builds a model of the domain. Business rules, business objects and other elements of the domain are depicted in software. This depiction is never perfect. Developers continuously learn new facts about the domain. They must adapt their model and therefore also the software itself. This learning process is unavoidable. No one can be simply commanded to immediately understand the domain completely. This necessitates an iterative process, in which the developers continuously adapt to what they learn.

Requirements change. Such changes can arise through transformation of the business. By working with and reflecting on the software, users can arrive at new ideas as to how the software can support the work processes even better.

Teams learn how to design architectures. I posted a survey on this topic on Twitter and Mastodon. The large majority of respondents reported participating in the design of fewer than ten architectures. This survey may not be representative, but similar surveys taken, for instance, while dividing up groups during trainings have revealed that participants have worked on even lower numbers of architectures. That is to be expected. After all, people typically work for a long time – several years, for example – on a project with a given architecture. Logically, it is therefore not possible for anyone to participate in the creation of all that many architectures.

But even if someone worked constantly on architectures, the industry itself is continuously learning about architectures. This should not come as a surprise. We all know that software architecture is anything but a simple topic. Engaging with new software architecture approaches is an important part of our work. To discover ideas and learn from the experience of others, we read and write articles, attend conferences and engage in other forms of discourse. But this also means that the software architecture design we are currently developing will look different in the future, and we may even need to modify an already existing architecture.

In other words, we learn to better understand the domain, the requirements change, and we learn how to design better architectures. We can therefore expect architecture to need improvement – and that will always be true, not just at the start of a project. It is even the case that highly complex systems can only be developed by first designing a simple system and then improving upon it. Any attempt to design an architecture for a complex system in a single go is, in fact, destined to fail.

In the example project, better safeguards from the start would not help much. Even if the team identified and eliminated more hurdles before the start of the next project, they would only go on to discover something else that was not properly modeled in the current architecture, and would then need to fundamentally alter the architecture for that reason. It might even be better to establish fewer safeguards at the outset. A prototype was built during the project to validate the module-by-module migration approach. That approach was later discarded due to the nature of the domain. In other words, the prototype could have been skipped.

The lesson learned should therefore be different: Poor software architectures can hardly be avoided through greater safeguards. We must rely on iteration in the development of architecture.

How much architecture do we need?

This gives rise to the question of how much architecture must be defined at the start of a project at all. Tobias Goeschel takes a very radical approach here, which he calls “domain prototyping” (episode 134 of the live discussion series “Software-Architektur im Stream”). The project begins with the minimum possible technology stack. These days, projects typically make use of a database and a web server. Domain prototyping initially dispenses even with these technologies – and naturally also with messaging solutions such as Kafka or other advanced technologies. This yields a system that runs as a complete application on a single computer with only a rudimentary technology stack. Developers can therefore focus entirely on the business logic and its structure, and changes to that logic are simple to implement because no technology code needs to be adapted. The resulting architecture then structures the business logic. This is an important aspect of the software architecture since it provides particularly effective support for domain-specific changes, making it more important even than many technical aspects of an architecture that otherwise tend to draw the attention of developers.
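As a rough illustration of what such a domain prototype might look like, here is a minimal Python sketch. The order domain, the class names and the discount rule are assumptions made up for this example; they do not come from the project described above or from the stream episode. The point is only that the business rule lives in plain objects held in memory, with no database, web server or messaging involved.

```python
from dataclasses import dataclass, field
from decimal import Decimal


@dataclass(frozen=True)
class LineItem:
    product: str
    unit_price: Decimal
    quantity: int

    def total(self) -> Decimal:
        return self.unit_price * self.quantity


@dataclass
class Order:
    customer: str
    items: list[LineItem] = field(default_factory=list)

    def add(self, product: str, unit_price: str, quantity: int = 1) -> None:
        if quantity < 1:
            raise ValueError("quantity must be at least 1")
        self.items.append(LineItem(product, Decimal(unit_price), quantity))

    def total(self) -> Decimal:
        # Hypothetical business rule: 10% discount from 100.00 upward.
        subtotal = sum((item.total() for item in self.items), Decimal("0"))
        if subtotal >= Decimal("100"):
            return (subtotal * Decimal("0.9")).quantize(Decimal("0.01"))
        return subtotal


# The whole "persistence layer" is a dictionary in a single process.
orders: dict[str, Order] = {}
```

If the discount rule turns out to be wrong once the team understands the domain better, only `Order.total` has to change. No schema, mapping or endpoint needs to be touched.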

Technologies such as databases are then only introduced when they are required. At this point, the teams already have experience with the business logic. Selecting the appropriate technology becomes easier as a result. After all, there are many different NoSQL databases as well as relational databases. And they all have different properties. If you better understand what you need to implement, you can select the technology with properties that are especially advantageous for the business logic. In the example project, the migration method should presumably have been chosen only after the developers had some experience with the business logic. A more appropriate migration approach would have been selected this way.

Last responsible moment

Domain prototyping is a very radical approach. In every project and for every question, however, it is possible to consider the last moment at which a decision can still be made responsibly, in other words the last responsible moment. This is a generalization of the question as to how much must be decided at the start of a project. In general, a later decision has the advantage that more facts are known, meaning that a better decision can be made. Nevertheless, there is a problem with this concept: It is subjective and relies on the courage of the participants. Whether it would have made a difference in our example project is therefore unclear. In that case, the decision for a migration strategy was backed up with a prototype, which tends to indicate a less courageous approach. In such a context, it does not appear tremendously likely that anyone would find the courage to put off the decision on a migration strategy long enough for new insights into the domain to point the way to a different strategy.

YAGNI

Another related concept is YAGNI (“You Ain’t Gonna Need It”). This principle of extreme programming (XP) says that functionality should only be built into a system if it is truly needed. YAGNI is a type of brainwashing to prevent BDUF (“Big Design Up Front”). Precisely because it is impossible to predict future decisions, BDUF is not a good idea. In other words, YAGNI fits perfectly with the points made above.

Nevertheless, YAGNI can sometimes be too radical. It can cause information to be ignored. If you are aware of a potential requirement, you are certainly entitled to examine it and consider what impact an implementation of this requirement might have. Whether you eventually take this requirement into account in the actual architecture is another question.

But what might a less radical approach look like?

A view of the peak

One analogy is an expedition to summit a mountain. Successfully reaching the peak stands here for successful completion of the project. When it comes to architecture, the focus is often on the ultimate architecture for the final state of the project. This makes sense, of course, since that architecture is expected to solve all of the project’s problems. Even on an expedition, it is useful to look up to see how far away the peak still is. But it is also important on an expedition – perhaps even more important – to take each next step and to overcome the next obstacle – such as a river. It should be the same with architecture. The architecture must first and foremost provide support for the current challenges, such as implementing new features. You should not lose sight of the ultimate architecture, but it is secondary and only relevant over the long term.

Another useful acronym might be DBS (“Don’t Be Stupid”). Changes that will very probably take place should be considered already during the initial draft of the architecture. But the focus should lie on the features and objectives to be implemented now, not so much on a “perfect” and apparently future-proof final architecture. In the end, even this will need to be tweaked and adapted to the current requirements, which is only possible based on the insights gained from today’s challenges.

Future proof? No, thank you

We often have to deal with suboptimal architectures. One important hypothesis of this article is that these architectures did not arise because the initial architectural design was suboptimal, but because the architecture was not adapted over time.

A future-proof architecture requires extensive thought and effort. When a feature must be implemented in a “future-proof architecture,” the developers will presumably strive to do so without making changes to the architecture. Otherwise, the architecture is clearly not future-proof. The “future-proof” architecture then becomes an excuse not to make truly necessary changes.

If the project is approached under the paradigm of iterative architecture development, however, every change will prompt the question of whether the architecture is still a good fit or whether it must be modified or even completely revamped. The absence of a bias towards retaining the architecture will therefore lead to a better architecture in the end.

As in the example project, a prototype sometimes encourages people to hold firm to a specific architecture. After all, a prototype represents a large financial and emotional investment in the architecture. It can become very difficult to admit that the architecture is no longer fit for purpose and must be changed.

This leads to a paradox: The focus on a “future-proof” architecture leads directly to an architecture that is not future-proof. The argument is essentially a psychological one. Working on an optimally “future-proof” architecture causes developers to put off adaptations that are actually necessary because they are too invested in the “future-proof” architecture.

No rules of thumb!

The focus on a “future-proof” architecture is not the only problematic bias. YAGNI is another example. Rules of thumb, such as whether a module should be small or large, can be counterproductive. Architecture decisions should always be justified. Rules of thumb can serve to cut discussions short, so that a decision ends up being justified by an unexamined rule instead of by actual reasoning.

Moreover, architecture decisions must also be revised if changes occur that nullify the original justification. A rule of thumb, however, leaves the specific justification unclear, which also makes it harder to identify the point in time when the decision should be revised.

The result

As a result, we can expect an architecture that is burdened with some historical errors and provides the best possible support for the current requirements. It may not be aesthetically elegant, but that is not actually a relevant criterion for software architecture. It “only” has to support the business functionality as well as possible.

The structure

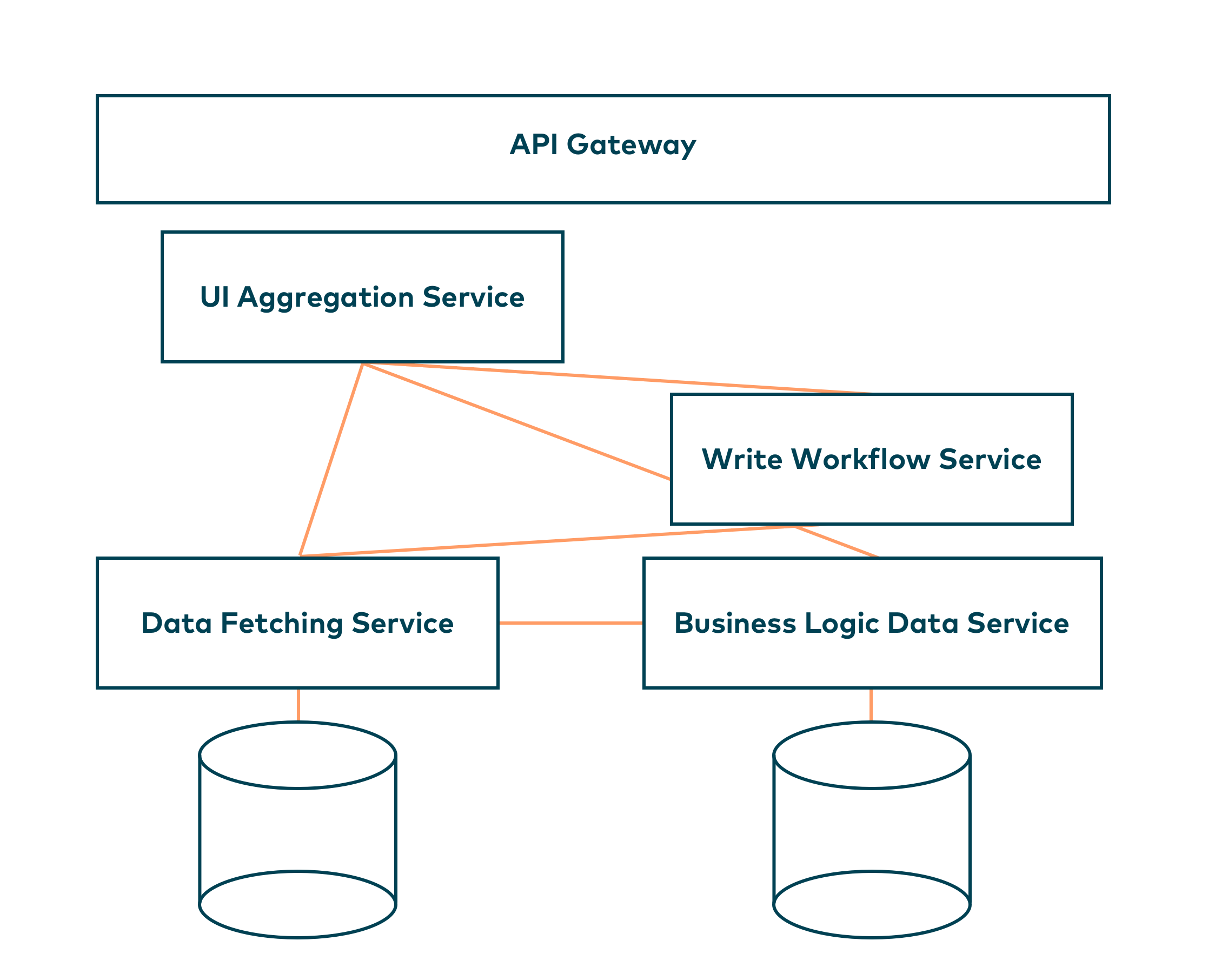

The structure of a system is an important component of the architecture, perhaps even the central one. This often involves a structure such as shown in Fig. 1. The question is then whether this structure is good or bad – and whether it is future-proof. The problem with future proofness is that future changes cannot be foreseen. We can expect that changes to the structure may also become necessary. In other words, it appears impossible to design a future-proof structure.

It would certainly be nice if the structure were simple and easy to understand. This also makes it easy to change because making changes requires comprehension of the structure. But a true evaluation is only possible after a few changes have taken place. Even if it is possible in principle to determine whether a structure is easy to change, how can you design a specific structure such that it is easily changed and understood?

In the domain-driven design (DDD) approach, the domain itself determines the design, meaning that the architecture should reflect the domain as well. The structure therefore conforms to the respective subject matter. It is not simply generic and does not feature any unnecessary flexibility. Generic design and flexibility are approaches often employed in the attempt to make architecture future-proof.

We can evaluate the structure in Fig. 1 from this perspective. There is no clear indication of the domain the structure is intended for. It could be used for the server components of a self-driving car or the backend of a computer game. It is purely technical. That is certainly an important aspect of the design, but often such a technical structure is all that is understood by the structure of a system.

Another aspect can be seen in the domain structure, as in Fig. 2. This is stable: As long as customers order things and are billed for them, followed by delivery of those things, this breakdown makes sense. It should provide ideal support for changes arising in the domain because it is consistently based on that domain.
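To make the contrast with a purely technical breakdown more tangible, the following Python sketch shows one way a domain-based structure could look in code. It assumes the breakdown named in the text (ordering, billing, delivery); all class and field names are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Order:
    order_id: int
    customer: str
    amount_cents: int


@dataclass(frozen=True)
class Invoice:
    order_id: int
    customer: str
    amount_cents: int


@dataclass(frozen=True)
class Shipment:
    order_id: int
    customer: str


class Ordering:
    """Domain module: customers order things."""

    def __init__(self) -> None:
        self._next_id = 1

    def place(self, customer: str, amount_cents: int) -> Order:
        order = Order(self._next_id, customer, amount_cents)
        self._next_id += 1
        return order


class Billing:
    """Domain module: customers are billed for what they ordered."""

    def bill(self, order: Order) -> Invoice:
        return Invoice(order.order_id, order.customer, order.amount_cents)


class Delivery:
    """Domain module: billed orders are delivered."""

    def ship(self, invoice: Invoice) -> Shipment:
        return Shipment(invoice.order_id, invoice.customer)
```

Unlike names such as "controller", "service" or "repository", the module names here already say which business the system supports, and a change such as a new billing rule has an obvious place to go.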

Technologies

Technologies are a means to an end. What, then, is the value of modern technologies? The usual assumption is that they lead to systems that are easy to change. In truth, however, modifiability depends primarily on how well the developers know the respective technologies. Technologies can also influence certain quality attributes of the system. For example, if a technology has aged to a point where updates are no longer released, this can lead to a security problem.

In the field of software development, new technologies are continuously created. And, as already mentioned, old technologies eventually become no longer tenable due to security issues. Projects must therefore be prepared to switch to a new technology at some point.

For this reason, it makes sense to design the system code in a technology-independent way. But a change of technology only happens rarely. Perhaps a system will be migrated to a new database once or twice. The vast majority of changes will be domain-related. Plus, a change of technology often has domain-specific impacts. If an application should be available not only on the desktop but on mobile devices as well, this can open up new possibilities for features that are relevant to the domain. For example, a phone can be used to scan the barcodes of products. This feature makes little sense on the desktop.

Most consulting jobs are about migrating a system with old technology and old logic. In these cases, the logic is often poorly structured and hard to update. If this code had been technology-independent and easy to port to a new technology, it would presumably be rewritten anyway since the quality is so poor. In other words, technology-independent code does not offer much in terms of future proofness. Nevertheless, it is still worthwhile to write technology-independent code because it is easier to understand, making it easier to modify as well.
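A small Python sketch may help show what technology-independent code can mean in practice. It assumes a hypothetical `CustomerStore` interface with two interchangeable implementations, one in memory and one using the standard library's `sqlite3` module; the names and the `greeting` function are invented for this example. Note that the main benefit illustrated is readability of the business logic, in line with the argument above, rather than any guarantee of future proofness.

```python
import sqlite3
from typing import Optional, Protocol


class CustomerStore(Protocol):
    """Hypothetical interface: the only thing the business logic knows about storage."""

    def save(self, customer_id: str, name: str) -> None: ...

    def find_name(self, customer_id: str) -> Optional[str]: ...


class InMemoryCustomerStore:
    def __init__(self) -> None:
        self._names: dict[str, str] = {}

    def save(self, customer_id: str, name: str) -> None:
        self._names[customer_id] = name

    def find_name(self, customer_id: str) -> Optional[str]:
        return self._names.get(customer_id)


class SqliteCustomerStore:
    def __init__(self, connection: sqlite3.Connection) -> None:
        self._connection = connection
        connection.execute(
            "CREATE TABLE IF NOT EXISTS customers (id TEXT PRIMARY KEY, name TEXT NOT NULL)"
        )

    def save(self, customer_id: str, name: str) -> None:
        self._connection.execute(
            "INSERT OR REPLACE INTO customers (id, name) VALUES (?, ?)",
            (customer_id, name),
        )
        self._connection.commit()

    def find_name(self, customer_id: str) -> Optional[str]:
        row = self._connection.execute(
            "SELECT name FROM customers WHERE id = ?", (customer_id,)
        ).fetchone()
        return row[0] if row else None


def greeting(store: CustomerStore, customer_id: str) -> str:
    # Business logic: contains no SQL and no knowledge of the storage technology.
    name = store.find_name(customer_id)
    return f"Hello, {name}!" if name is not None else "Hello, guest!"
```

The same `greeting` function works with `InMemoryCustomerStore()` and with `SqliteCustomerStore(sqlite3.connect(":memory:"))`. If the logic itself were poorly structured, however, swapping the store would not save it from a rewrite.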

Whether it is always necessary to use “new” technologies is an open question. It is important to consider whether developers are still able to work productively with a technology. This depends on the specific teams. What looks to one team like a technology from the Stone Age is perfectly acceptable to another team. This can even be turned into an advantage: Instead of investing in migration to a new technology, the developers can be trained in the technology currently in use, or new developers with skills in the respective technology can be hired. Of course, this won’t help if there are no more security updates for the technology.

For a more profound look, try watching the stream recording on this topic.